Overview of Skilltype’s approach to A11Y

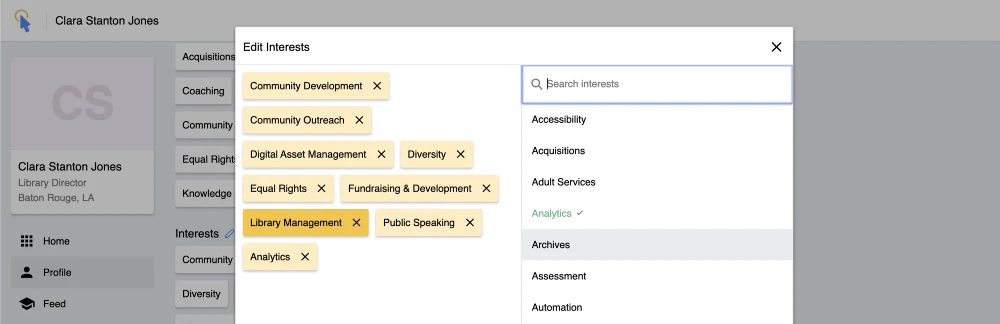

Screenshot of Skilltype’s tagPicker UI element, v1.4.70

Inclusion at the Core of Skilltype

At the heart of Skilltype is a spirit of inclusion. We know that information professionals (those who work in libraries, who conduct scholarly research, who manage knowledge systems in our institutions) are vital to the survival and dissemination of truth. We make tools to help these professionals do their jobs well, and it’s important that our tools invite, include, and serve all those who might benefit from their use. That’s why we are committed to making our software accessible to users of all levels of ability. The following posts describe the considerations and challenges we faced when developing with accessibility in mind, outline some architectural strategies for building accessible components in React, and then walk through accessibility implementations in two of our components, MenuBar and TagListPicker.

Part 1: Good A11Y is Good UX

Written by Paul Hine, Lead App Developer at Skilltype

We’re not Accessibility experts. We’ve read a lot of blog posts about Accessibility and looked through various standards documents, but we don’t claim to have the depth of experience of, say, an Accessibility professional. However, we weren’t going to let that be an excuse to “leave accessibility for later,” which is a tempting path¹. Instead, we decided to approach accessibility (A11Y) the way we approach user experience (UX). None of us have degrees in UX or call ourselves UX professionals, but like most teams building apps for web and mobile, UX is a top priority in our software design and we spend a lot of time discussing and testing it.

Mining Sensibilities for Inclusive Design

Thinking about A11Y as UX is not trivial, but it can be intuitive. The first step in our UX design is always mining our own sensibilities as web users. We ask ourselves questions like “does this make sense?”, “what would I expect to happen here?”, and “is this too much information on one screen?” Then, we use those sensibilities to make some best guesses that inform our interface design and behavior. We can do the same thing with A11Y with a little extra effort: first, we have to build some sensibility around A11Y features by using the web the way someone with a disability would.

We began by familiarizing ourselves with the types of disabilities some of our users may have. The A11Y Project identifies four broad categories of accessibility: Visual, Auditory, Motor and Cognitive². Each has its own specific set of guidelines and considerations, but there is also a lot of overlap. For example, if keyboard interaction is well-designed on your site, it will assist users with visual impairments who rely on standard keyboard navigation as well as motor-impaired users who rely on special control devices. So, for the purposes of this post, we’ll focus on a blind or visually impaired user, but many of the analyses and strategies here can inform design and development for other accessibility needs.

Approaching Accessibility as User Experience

The W3C Web Accessibility Initiative (WAI) site provides some useful personas for users with disabilities³. Here’s an excerpt from their persona for Ilya, a senior staff member (of a fictitious organization) who is blind:

Ilya is blind. She is the chief accountant at an insurance company that uses web-based documents and forms over a corporate intranet and like many other blind computer users, she does not read Braille.

Ilya uses a screen reader and mobile phone to access the web. Both her screen reader and her mobile phone accessibility features provide her with information regarding the device’s operating system, applications, and text content in a speech output form.

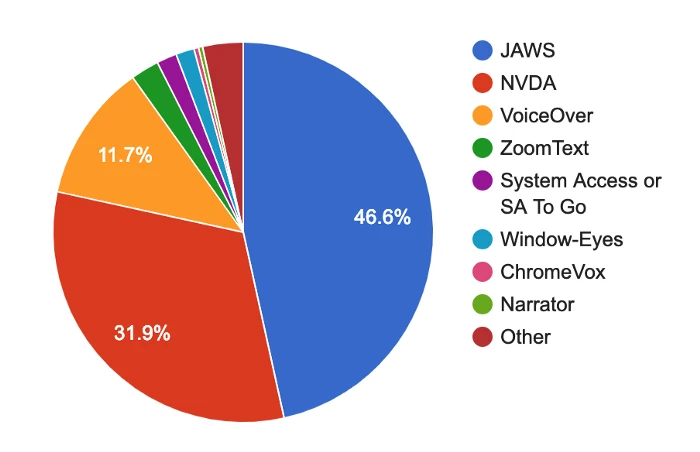

We know that responsible UX/UI design must address site or app functionality in the various browsers and platforms where users will encounter our work “in the wild” (Chrome, Firefox, iOS, Android, etc.), so our next step was to identify the screen readers that our visually impaired users would use on our web app. For this, we looked to WebAIM, a non-profit organization out of Utah State University that compiles data every year on screen reader usage and demographics. We learned that the vast majority (> 80%) of users with disabilities used 3 screen readers: JAWS, NVDA and VoiceOver⁴. As JAWS is a commercial product with a non-trivial price tag ($90/year), we opted to start with the free applications.

Bridging the Accessibility Gap

To educate ourselves, we watched some videos of users with disabilities actually using screen readers to interact with applications like email on their computers⁵ and phones⁶, as well as navigating and using web sites like reddit⁷ (and even playing Mortal Kombat⁸). We then installed and/or activated these applications on our own computers and phones and spent some time using them to browse the web.

At first, it’s incredibly awkward and slow to do anything, especially on the phone, where the gestural metaphors are nearly all repurposed. One vlogger on YouTube who is blind and demoing screen readers on her phone even warns, “don’t activate VoiceOver on your iPhone until you know how to use it, or you won’t be able to turn it off”⁶. But after a few hours of experimentation and Googling, you should be able to proficiently navigate a website with only your ears and the keyboard. It’s very important to reach this level of proficiency with screen readers, because you must begin to build a design intuition for alternative forms of interaction. Over time, as you design, build, and test accessible interfaces, this intuition will mature and, ideally, be on par with your sense for visual design and usability.

In Part 2, we’ll look at how React is particularly well suited for architecting accessible apps.

¹ “Start with Accessibility.” — https://www.w3.org/WAI/EO/wiki/Start_with_Accessibility

² A11Y Project. https://a11yproject.com/

³ W3C A11Y Stories. https://www.w3.org/WAI/people-use-web/user-stories

⁴ WebAIM Survey. https://webaim.org/projects/screenreadersurvey7/

⁵ Edison, Tommy. “How A Blind Person Uses A Computer.” https://www.youtube.com/watch?v=UzffnbBex6c

⁶ Burke, Molly. “How I use technology as a blind person! — Molly Burke (CC).” https://www.youtube.com/watch?v=TiP7aantnvE

⁷ Minor, Ross. “How Blind People Use Computers.” https://www.youtube.com/watch?v=rsglR8Y26jU

⁸ Minor, Ross. “How I play Mortal Kombat X Completely Blind.” https://www.youtube.com/watch?v=vivZNuUih7I